If there is one true indicator of how disruptive a new technology is, it’s certainly the public outpouring of fear and suspicion. If we use societal angst as a measure, the current renaissance of artificial intelligence (AI) is a good candidate for groundbreaking technological disruption. AI will change life as we know it, as Elon Musk, Bill Gates, Stephen Hawking and other great minds have told us. The widespread anxiety about the harmful consequences of AI applications is not an unprecedented reaction to technological change, but rather an expression of the societal unease that commonly precedes the changes associated with new technologies and the vast potential that comes with them.

We’re looking beyond today’s IoT, toward a future where smart connected devices not only talk to each other but also use AI to interact on our behalf. This new global fabric of artificially intelligent things will one day be known as the AIoT, the Artificial Intelligence of Things.

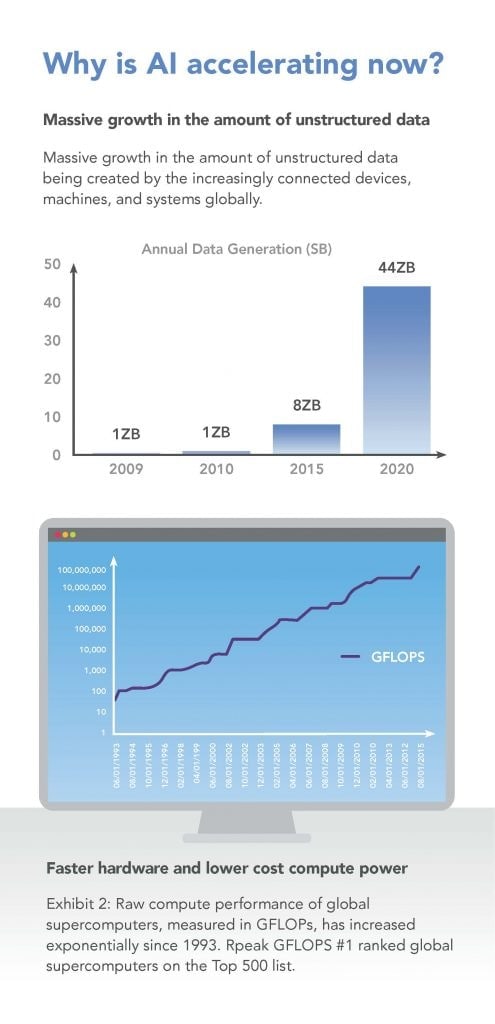

When pure mathematics starts to make an impact on real life

As a discipline of mathematics (and, to a certain extent, of philosophy), AI lived in the shadows for more than six decades before public interest suddenly soared in recent years. One reason the publicity is so recent is that, for a long time, AI applications were purely theoretical, if not science fiction. For artificial intelligence use cases to become real in the present IoT environment, three conditions had to be fulfilled:

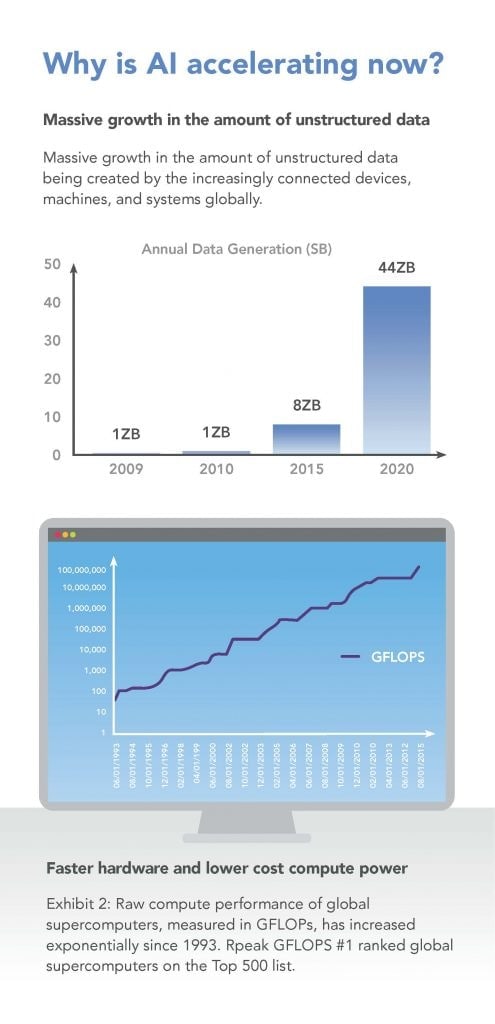

- very large real-world data sets
- a hardware architecture and environment with significant processing capability
- powerful new algorithms and artificial neural networks (ANNs) to make the best use of the above

It is apparent that the latter two requirements depend on each other, and that the breakthroughs in deep neural nets could not have occurred without a significant increase in processing power.

As for the input: large data sets of every kind (vision, audio and environmental data) are being generated by an increasing number of embedded IoT devices, and the flow of data is growing exponentially. In fact, annual data generation is expected to reach 44 zettabytes (one zettabyte is 1 billion terabytes) by 2020, which translates to a compound annual growth rate (CAGR) of 141 % over 5 years. Just 5 years after that, it could reach 180 zettabytes.
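A compound annual growth rate follows the standard formula CAGR = (end / start)^(1 / years) - 1. As a small sketch, assuming nothing beyond that formula, the Python function below applies it to the 2020 and 2025 projections from the text and checks the zettabyte-to-terabyte conversion; the names `cagr` and `ZETTABYTE_IN_TERABYTES` are our own.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Projections from the text: 44 ZB of data per year in 2020, possibly 180 ZB in 2025.
ZETTABYTE_IN_TERABYTES = 10**9  # 1 ZB = 10^21 bytes = 10^9 TB
rate_2020_2025 = cagr(44.0, 180.0, 5)
print(f"Implied CAGR 2020-2025: {rate_2020_2025:.1%}")
print(f"44 ZB = {44 * ZETTABYTE_IN_TERABYTES:,} TB")
```

For the 2020 to 2025 projection this works out to roughly 32.5 % per year; the same function applies to any start and end values.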

Starting around 2015, when multicore application processors and graphics processing units (GPUs) became widely available, we have also had the tools to cope with these amounts of data. Parallel processing became a much faster, cheaper and more powerful business. Add fast, abundant storage and more powerful algorithms to sort and structure that data, and suddenly there is an environment in which AI can prosper and thrive.

As of 2018, AI voice recognition software trained with neural networks is an integral part of a variety of consumer and industrial applications.

Computing power is increasing by roughly a factor of 10 each year, driven mainly by new classes of custom hardware and processor architectures. This computing boom is a key driver of AI progress and will help make AI mainstream. And this is just the beginning.

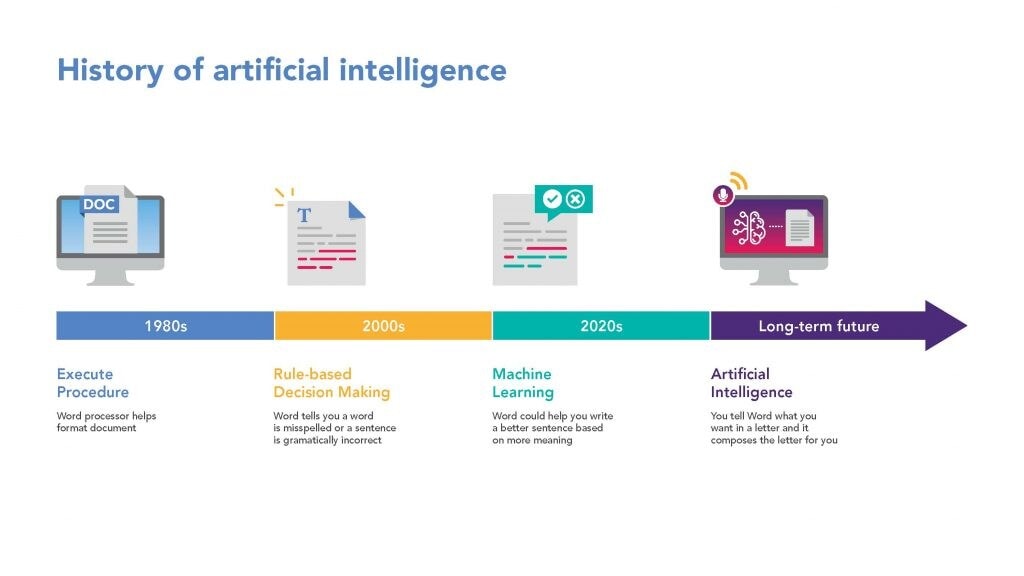

AI: Objects to reflect human reasoning

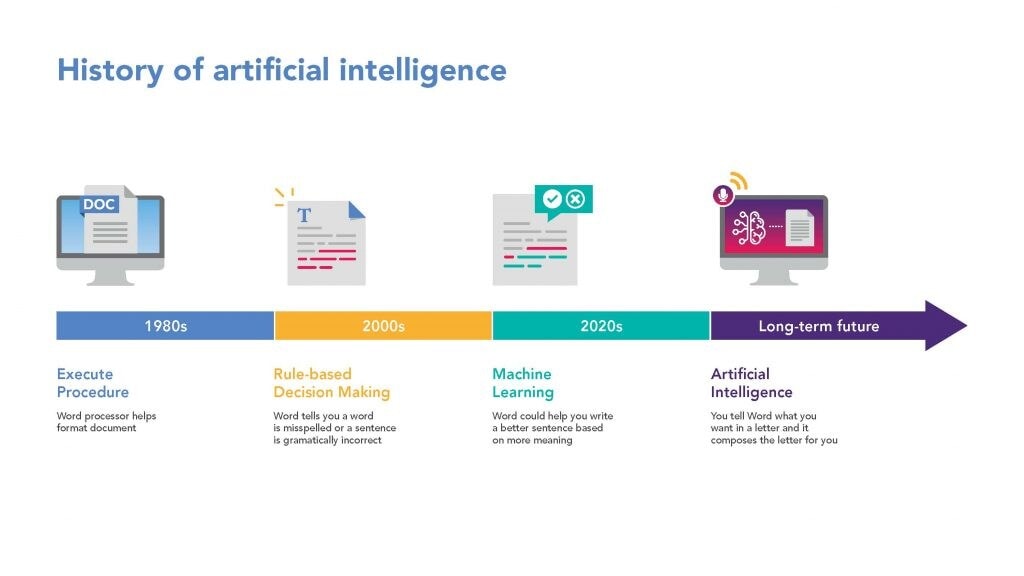

By its classic definition, artificial intelligence is a rather unspectacular affair. In his groundbreaking 1976 paper “Artificial Intelligence: A Personal View”, British neuroscientist and AI pioneer David Marr states that the goal of AI is to identify and solve useful information processing problems, and to give an abstract account of how to solve them, which is called a method.

The small but decisive detail is that the information processing problems AI deals with have their roots in aspects of biological information processing. In other words, AI looks to re-engineer the structure and function of the human brain to enable machines to solve problems the way humans would, only better.

Compared with this scientific effort, today’s industrial approach to AI is much more pragmatic. Rather than trying to replicate the human mind, current AI development uses human reasoning as a guide to provide better services or create better products. But how does that work? Let’s have a look at the current approaches.

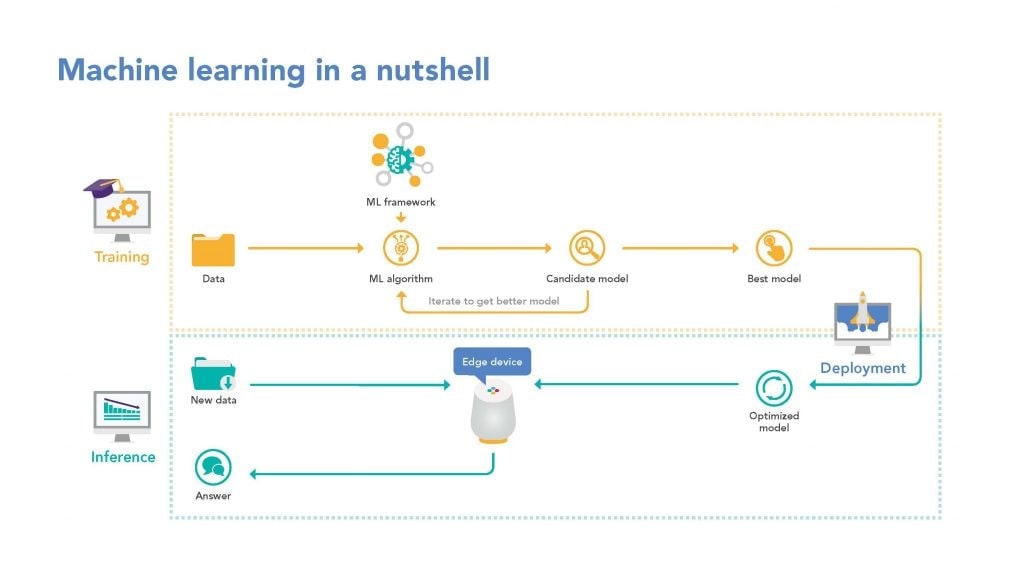

ML: Algorithms to parse, learn, determine or predict

As a subset of artificial intelligence, machine learning uses statistical techniques to give computers the ability to learn without being explicitly programmed. In its crudest form, machine learning uses algorithms to analyze data and then makes a prediction based on its interpretation. To achieve this, machine learning applies pattern recognition and computational learning theory, including probabilistic techniques, kernel methods and Bayesian probabilities, which have grown from a specialist niche to become mainstream in current ML approaches. ML algorithms operate by building a model from an example training set of inputs in order to make data-driven predictions expressed as outputs.
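To make “building a model from a training set” concrete, here is a minimal Python sketch. It uses ordinary least-squares line fitting, one of the simplest statistical learning techniques, rather than the kernel or Bayesian methods named above; the function names and the toy training data are illustrative assumptions. The rule relating inputs to outputs is never written into the code: the model recovers it from the examples.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Fit y = slope * x + intercept to the training set by ordinary least squares."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: Tuple[float, float], x: float) -> float:
    """Make a data-driven prediction for a new input using the fitted model."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training set generated by y = 2x + 1; the code never states that rule.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [1.0, 3.0, 5.0, 7.0, 9.0]
model = fit_line(train_x, train_y)
print(model)                  # (2.0, 1.0)
print(predict(model, 10.0))   # 21.0
```

Once fitted, the two parameters are the whole model, and predicting a new input is a single multiply-add.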

Computer vision is one of the most active and popular fields in which ML is applied. It extracts high-dimensional data from the real world to produce numerical or symbolic information, ultimately in the form of decisions. However, until very recently, machines needed extensive hand coding to develop advanced pattern recognition skills. Human operators had to extract edges to define where an object begins and ends, apply noise-removal filters or add geometrical information, e.g., on the depth of a given object. It turned out that even with advanced machine learning training software, it’s not a trivial task for a machine to make real sense of a digital reproduction of its environment. This is where deep learning comes into play.
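The hand-coded edge extraction described above can be illustrated with a classic hand-designed filter. The sketch below applies the 3×3 Sobel kernels to a tiny made-up grayscale image; Sobel is our choice of example, not a method named in the text, and the magnitude uses the common |Gx| + |Gy| approximation. Every number in the kernels was chosen by a human, which is the kind of manual feature engineering that deep learning replaces with learned filters.

```python
from typing import List

Image = List[List[int]]

# Classic 3x3 Sobel kernels: hand-designed weights that respond to changes in
# brightness along x (SOBEL_X) and along y (SOBEL_Y).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def sobel_magnitude(img: Image) -> Image:
    """Approximate gradient magnitude |Gx| + |Gy| for interior pixels; borders stay 0."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    p = img[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * p
                    gy += SOBEL_Y[i][j] * p
            out[r][c] = abs(gx) + abs(gy)
    return out


# Hypothetical 5x5 grayscale image: dark left part, bright right part.
image = [[0, 0, 9, 9, 9] for _ in range(5)]
edges = sobel_magnitude(image)
# Rows 1-3 are [0, 36, 36, 0, 0]: the filter fires along the dark/bright boundary.
for row in edges:
    print(row)
```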

DL: Mirroring of the human neuronal network

The idea that software can simulate the neocortex’s array of neurons in an artificial “neural network” is decades old. Deep learning algorithms attempt exactly that: to mimic the multilayer structure and functionality of the human neuronal network. In a real sense, a deep learning algorithm learns to recognize patterns in digital representations of sounds, images and other data. But how?

With the current improvements in algorithms and increasing processing capacity, we can now model more layers of virtual neurons than ever before, and thus run models of much greater depth and complexity. Today, Bayesian deep learning is used in multilayer neural nets to tackle complex learning problems.
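The multilayer idea can be shown at toy scale. The following Python sketch runs a forward pass through a two-layer network of artificial neurons that computes XOR, a function a single-layer linear model cannot represent. The weights are hand-picked for readability; in actual deep learning they are learned from training data, and networks have far more layers and neurons. All names here are illustrative.

```python
from typing import List


def relu(v: List[float]) -> List[float]:
    """Rectified linear activation applied element-wise."""
    return [max(0.0, x) for x in v]


def dense(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """One fully connected layer: each neuron outputs a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


# Hand-picked (not learned) weights for a 2-2-1 network that computes XOR.
W1 = [[1.0, 1.0],
      [1.0, 1.0]]
B1 = [0.0, -1.0]
W2 = [[1.0, -2.0]]
B2 = [0.0]


def forward(x: List[float]) -> float:
    hidden = relu(dense(x, W1, B1))  # layer 1: two hidden neurons with ReLU
    return dense(hidden, W2, B2)[0]  # layer 2: one linear output neuron


for a in (0.0, 1.0):
    for b in (0.0, 1.0):
        # The output is 1.0 only when exactly one input is 1.
        print(int(a), int(b), forward([a, b]))
```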

However, what we can do today still mostly falls under the concept of “narrow” or “weak” AI: technologies able to perform specific tasks as well as, or better than, humans can. For instance, AI technologies for image classification or face recognition reproduce certain facets of human intelligence, yet not the full spectrum or even a combination of several human capabilities. A machine capable of performing a multitude of complex tasks, one that exhibits behavior at least as skillful and flexible as a human being’s, would be considered “strong AI”. While experts are divided over whether strong AI can ever be achieved, that doesn’t stop them from trying.

Since 2013, external investment in artificial intelligence has tripled


One solid indicator of the technology’s disruptive potential is the scale of investment. According to McKinsey, $26 to 39 billion was invested in AI in 2016, most of it by tech giants such as Google™ and Baidu™. Startups in the space, one of the best-funded categories, increased by about 141 %. Recognizing the industry’s enormous potential, governments around the globe are aiming for top positions in AI. While a notable number of national AI plans are in the works, the greatest economic gains are expected for China (26 % boost to GDP in 2030) and North America (14.5 % boost), which together account for almost 70 % of the global impact.

According to recent studies, artificial intelligence has the potential to almost double the value of the digital economy, to US$ 23 trillion by 2025. From a strategic viewpoint, AI’s biggest potential lies in its complementarity with the IoT. An integrated technology portfolio creates a powerful new platform for digital business value.

Advancing AI to the Edge I: High Performance Processing to do the Job

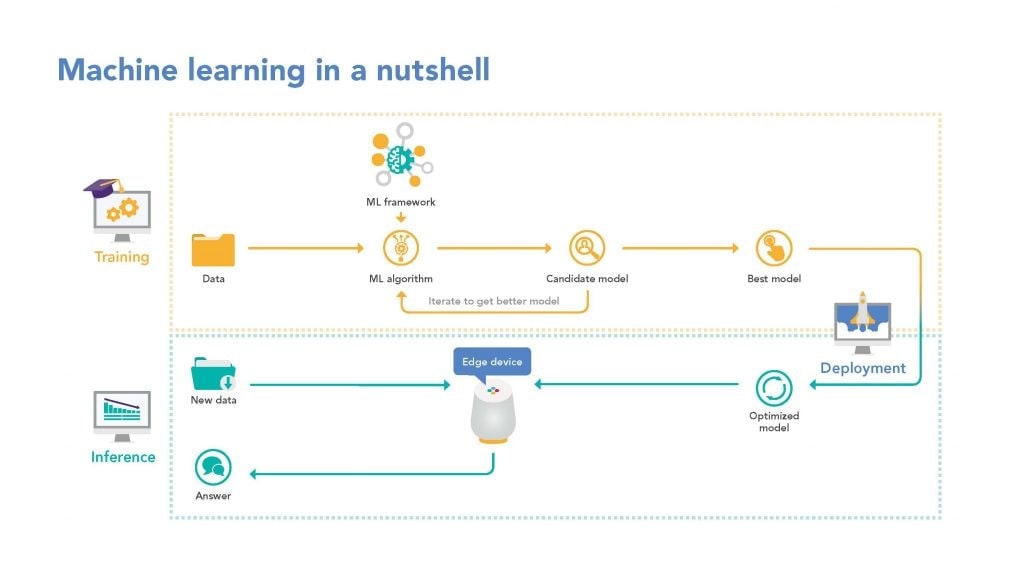

As we have seen, AI relies heavily on adequate hardware to unfold its massive potential. Machine learning, in particular, requires enormous processing and storage capacity. A training cycle for one of Baidu’s speech recognition models, for instance, requires not only four terabytes of training data, but also 20 exaflops of compute, that is, 20,000 quadrillion math operations, across the entire training cycle. Given its hunger for powerful hardware, it is no wonder that AI today is still mostly confined to data centers.
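To put 20 exaflops of total compute in perspective, the sketch below converts it into wall-clock training time at a few sustained throughputs. The throughput figures are hypothetical round numbers picked only to span several orders of magnitude, not measurements of any particular product, and real training rarely sustains peak throughput.

```python
TOTAL_OPS = 20e18        # 20 exaflops of total compute = 20,000 quadrillion operations
SECONDS_PER_DAY = 86_400


def training_days(total_ops: float, sustained_ops_per_second: float) -> float:
    """Wall-clock days needed to execute total_ops at a constant sustained throughput."""
    if sustained_ops_per_second <= 0:
        raise ValueError("throughput must be positive")
    return total_ops / sustained_ops_per_second / SECONDS_PER_DAY


# Hypothetical sustained throughputs, chosen only for illustration.
SCENARIOS = [
    ("cluster at 1 PFLOP/s", 1e15),
    ("accelerator at 10 TFLOP/s", 1e13),
    ("microcontroller at 1 GFLOP/s", 1e9),
]

for label, ops_per_second in SCENARIOS:
    print(f"{label:>30}: {training_days(TOTAL_OPS, ops_per_second):,.1f} days")
```

At a sustained 10 TFLOP/s the training cycle takes about 23 days; at 1 GFLOP/s it would take centuries, which is why training stays in the data center.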

Uncoupling AI from the data centers and advancing it to the endpoints of the IoT will allow us to tap its full potential. Edge processing moves control of computing applications, data and services away from central nodes (the “core”) to the periphery of the Internet. Processing data at the edge significantly decreases the volume of data that must be moved, thereby increasing privacy, reducing latency and improving quality of service.

No longer relying on a central core also removes a major bottleneck and a potential single point of failure. Edge processing is based on distributed resources that may not be continuously connected to a network, in applications such as autonomous vehicles, implanted medical devices, fields of highly distributed sensors and a variety of mobile devices. To make use of AI in this challenging environment, an agile application is needed that can retain what it has learned and apply it quickly to new data. This capability is called inference: taking smaller chunks of real-world data and processing them according to the training the program has already done.
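Inference at the edge can be sketched as applying a frozen, pre-trained model to small chunks of a sensor stream and forwarding only the results. In the Python sketch below, a two-feature logistic regression stands in for a neural network; its weights, the window size and the sensor readings are all made-up assumptions. Nothing is learned on the device: the model only applies what training produced elsewhere.

```python
import math
from typing import Iterable, List, Tuple

# Hypothetical parameters of a tiny pre-trained anomaly model. In practice they would
# come from training in the data center; these values are made up for illustration.
WEIGHTS = (4.0, 2.0)  # weights for (window mean, window peak-to-peak range)
BIAS = -10.0
WINDOW = 4            # number of samples processed per chunk on the device


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def infer(window: List[float]) -> float:
    """Apply the frozen logistic-regression model to one chunk; returns P(anomaly)."""
    mean = sum(window) / len(window)
    spread = max(window) - min(window)
    return sigmoid(WEIGHTS[0] * mean + WEIGHTS[1] * spread + BIAS)


def edge_loop(samples: Iterable[float], threshold: float = 0.5) -> List[Tuple[int, float]]:
    """Process a stream chunk by chunk; keep (start index, probability) only for anomalies.

    Samples left over after the last full window are ignored in this sketch.
    """
    events: List[Tuple[int, float]] = []
    buf: List[float] = []
    for i, sample in enumerate(samples):
        buf.append(sample)
        if len(buf) == WINDOW:
            p = infer(buf)
            if p >= threshold:
                events.append((i - WINDOW + 1, round(p, 3)))
            buf = []
    return events


# Hypothetical vibration readings: two normal chunks, then a burst.
stream = [0.5, 0.6, 0.5, 0.4, 0.5, 0.5, 0.6, 0.5, 2.0, 3.5, 1.0, 3.0]
print(edge_loop(stream))  # [(8, 0.989)]
```

Of the twelve raw samples, only one event record leaves the device, which illustrates how processing at the edge cuts the volume of data that has to be moved.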

For inference to work in edge environments, it needs optimized processing architectures and hardware that meet specific requirements for processing capacity, energy efficiency, security and connectivity. NXP has established leadership in machine learning at the edge, particularly for inference tasks. The NXP portfolio covers almost the entire range of MCUs and application processors used in modern AI applications: the i.MX 6, 7 and 8 product families, Kinetis MCUs and Low Power Cortex, the QorIQ® communications processor portfolio, and S32 MCUs and microprocessor units. In fact, we have been ranked as one of the world’s top three artificial intelligence chipset companies.

Advancing AI to the Edge II: Dedicated Machine Learning Environment

To build innovative AI applications with cutting-edge capabilities, developers depend on a machine learning software environment that enables easy integration of dedicated functionality into consumer electronics, industrial environments, vehicles and other embedded applications. But to roll out AI-based business models at a much broader scale and to make AI applications available to billions of end users across all verticals, the industry must first overcome past limitations.

This is why NXP has developed machine learning hardware and software that enable inference algorithms to run within the existing architecture. The NXP ML environment supports fast-growing machine learning use cases in vision, voice, path planning and anomaly detection, and integrates platforms and tools for deploying machine learning models, including neural networks and classical machine learning algorithms, on those engines.

And this is only the first step: NXP is already working to integrate scalable artificial intelligence accelerators into its devices, which will boost machine learning performance by at least an order of magnitude.

A Glimpse into the future: Artificial Intelligence of Things

By designing things with smart properties and connecting them to the Internet of Things, we have created a global web of assets that has enhanced our lives and made them easier and more secure. The IoT gave us eyes and ears, and even hands, to reach out from the edge of the network into physical reality, where we gather raw information and stream it to the cloud to be processed into something of superior value: applicable knowledge.

By adding high-performance processing, we’ve started to process and analyze information less often in data centers and the cloud, and more often at the edge, where the magic happens. We witness that magic in smart traffic infrastructure, smart supply chain factories, mobile devices and front-end stores, in real time, wherever the action takes place that makes our lives colorful.

The IoT in its present shape has equipped us with unprecedented opportunities to enrich our lives. Yet it is only a stopover on the way to something even bigger and more impactful: the artificial intelligence of things.

Even though today’s smart objects stream data, learn our preferences and can be controlled via apps, they are not AI devices. They ‘talk’ to each other, yet they don’t play together. A smart container that monitors the cold chain of a supply of vaccines is not an AI system unless it does ‘something’, such as predicting how the temperature in the container will develop and automatically adjusting the cooling.
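The difference between monitoring and acting on a prediction can be sketched in a few lines. The Python example below fits a least-squares trend to recent container temperatures, extrapolates it a fixed horizon ahead and adjusts the cooling before the 2 to 8 °C range commonly used for vaccine storage is left. The linear-trend model, the 5-minute reading interval and the 30-minute horizon are illustrative assumptions; a real system might use a richer learned model.

```python
from typing import List

SAFE_MIN_C = 2.0   # common vaccine cold-chain range: 2-8 degrees Celsius
SAFE_MAX_C = 8.0


def trend_per_min(temps: List[float], interval_min: float) -> float:
    """Least-squares slope of evenly spaced readings, in degrees C per minute."""
    n = len(temps)
    if n < 2 or interval_min <= 0:
        raise ValueError("need at least two readings and a positive interval")
    xs = [i * interval_min for i in range(n)]
    mean_x, mean_t = sum(xs) / n, sum(temps) / n
    num = sum((x - mean_x) * (t - mean_t) for x, t in zip(xs, temps))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


def cooling_action(temps: List[float], interval_min: float = 5.0,
                   horizon_min: float = 30.0) -> str:
    """Extrapolate the trend and act before a limit is crossed, not after."""
    predicted = temps[-1] + trend_per_min(temps, interval_min) * horizon_min
    if predicted > SAFE_MAX_C:
        return "increase cooling"
    if predicted < SAFE_MIN_C:
        return "decrease cooling"
    return "hold"


# Hypothetical readings taken every 5 minutes.
print(cooling_action([5.0, 5.4, 5.8, 6.2, 6.6]))  # all in range but rising: "increase cooling"
print(cooling_action([5.0, 5.0, 5.1, 5.0, 5.0]))  # stable: "hold"
```

In the first series every reading is still within range, yet the extrapolated value after 30 minutes (about 9 °C) exceeds the limit, so the controller acts early instead of reporting a violation after the fact.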

An autonomous car, or a search-and-rescue drone that navigates offshore on its own, is in fact an AI system. If it drives or flies for you, you can trust that some serious AI capabilities are involved. Reading, speaking or translating language, predicting the mass and speed of an object, buying stock on your behalf, recognizing faces or diagnosing breast cancer are all artificially intelligent capabilities when performed by an algorithm.

Now, imagine a world in which all AI things were connected. Expanding the edge of the IoT with cognitive functions such as learning, problem-solving and decision-making would turn today’s smart things from mere practical tools into true extensions of ourselves, multiplying our possibilities to interact with the physical world.

As an integral part of the IoT, artificial intelligence is the foundation for entirely new use cases and services. Siemens®, for example, is using AI to improve the operation of gas turbines. By learning from operating data, the system can significantly reduce the emission of toxic nitrogen oxides while increasing the performance and service life of the turbine. Siemens is also using AI systems to autonomously adjust the blade angles of downstream wind turbines to increase the plant’s yield.

GE’s drone- and robot-based industrial inspection service, Rolls-Royce®’s IoT-enabled aircraft engine maintenance service and Google Duplex’s AI voice are further examples of the move toward the AI of things.

Now, what does this mean for the future?

The truth is, even with a broad range of nascent AIoT applications emerging, we can’t yet fathom what else is coming. One thing is certain, though: today’s digital society is undergoing a fundamental change. The paradigm shift that comes with the convergence of AI and the IoT will be even greater than the one we witnessed with the introduction of the personal computer or the mobile phone. NXP is driving this transformation with secure, connected processing solutions at the edge, enabling a boundless multitude of applications in the future AIoT.

Continue reading part II of this blog series under this link to learn about the implications of AI for IoT security.
