Having examined in this blog article how the shift of high-performance processing from the cloud to the edge of the network has enabled the Internet of Things (IoT) to thrive and, consequently, laid the foundations for AI, we now turn to one more aspect of AI that requires our full attention:

Progress in AI is closely tied to the development of cyber threats.

Every second, five new malware variants are discovered. Every hour, organizations across the globe are hit by one hundred previously unknown malware attacks. Every day, one million new malicious files appear in the connected world. With ever more devices and systems connected to the web, cybercrime has become a growing threat to our technological assets and to the safety of our society as a whole.

Machine learning is a double-edged sword: while ML enables industry-grade malware detection programs to work more effectively, bad actors will soon use it to enhance the offensive capabilities of their attacks. In fact, a group of researchers from the Vrije Universiteit Amsterdam recently demonstrated how this can work. In a side-channel attack that leaked information from the CPU’s translation lookaside buffers (TLBs), these white-hat hackers used novel machine learning techniques to train their attack algorithm and take its performance to a new level. They are confident that machine learning techniques will improve the quality of future side-channel attacks.

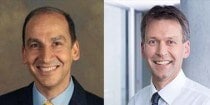

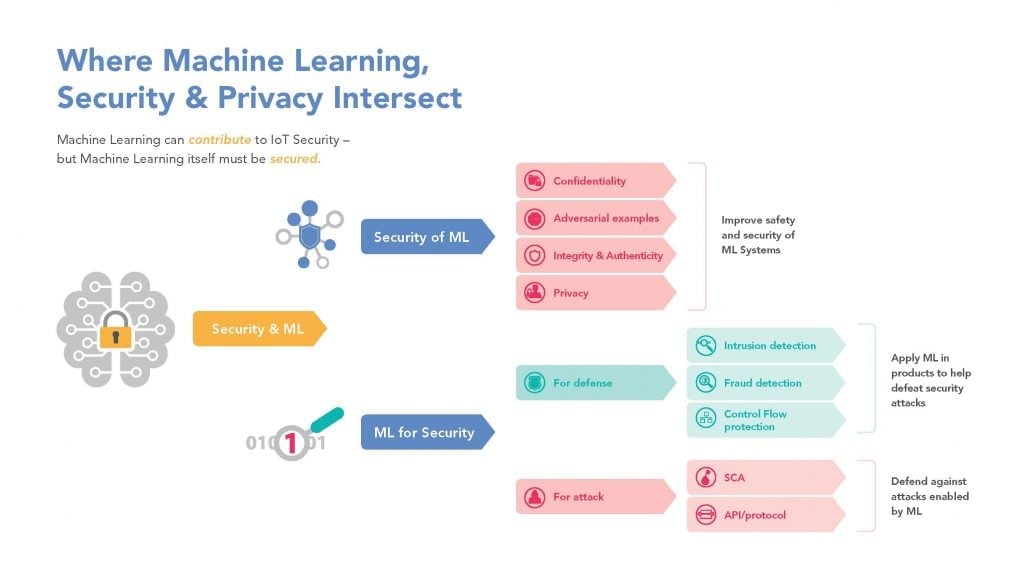

To prevent new, effective AI and ML techniques from shifting the balance of power in favor of attackers, we must focus on how to leverage artificial intelligence to improve system security and data privacy.

How ML can add to system security

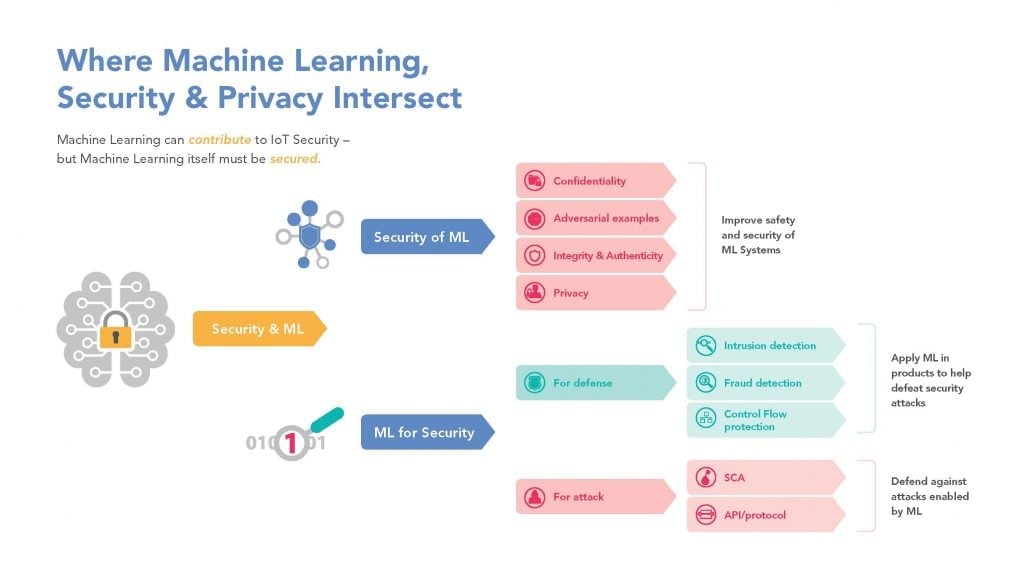

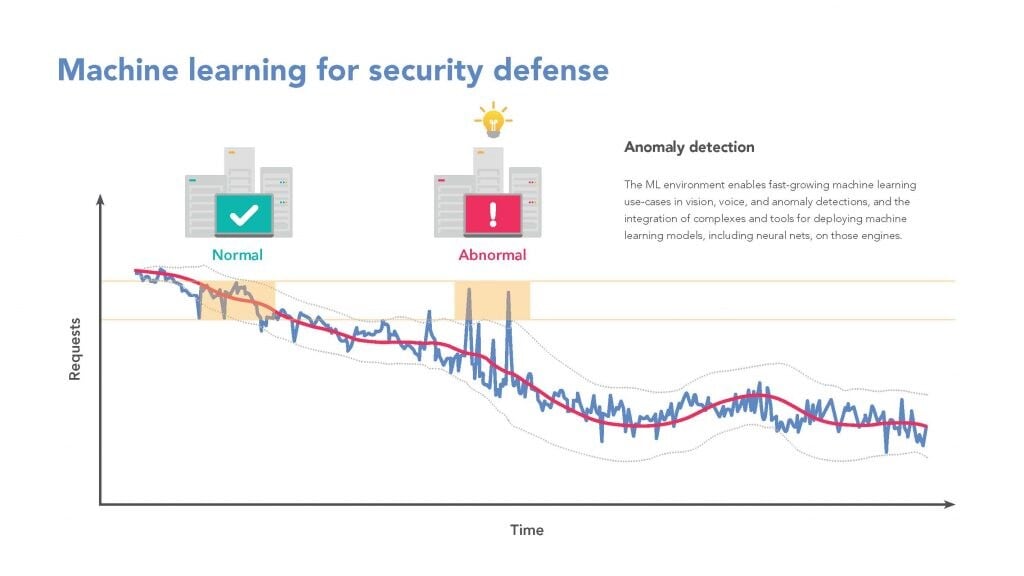

A good example of ML-based security is anomaly detection, in which the system detects anomalous behavior or patterns in a data stream.

Anomaly detection has long been applied routinely to spam and malware detection, but machine learning can extend it to more subtle and complex anomalous behavior within a system. While monitoring and protecting against external threats is crucial for an effective system defense, few organizations are aware of insider threats. In a 2016 survey, Accenture found that two thirds of the surveyed organizations had fallen victim to data theft from inside the organization. Of these, 91% reported that they did not have effective methods for detecting this type of threat. Machine learning can significantly aid in developing effective, real-time profiling and anomaly detection capabilities to detect and neutralize user-based threats from within the system.
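The per-user profiling idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product works: it assumes each user’s activity is reduced to one number per day (hypothetically, megabytes downloaded) and that a z-score against that user’s own history is a sufficient profile. The function names, the sample data, and the threshold of 3.0 are illustrative choices.

```python
import statistics

def build_profile(history):
    """Summarize a user's past activity as (mean, population standard deviation)."""
    return statistics.mean(history), statistics.pstdev(history)

def is_anomalous(value, profile, threshold=3.0):
    """Flag an observation whose z-score against the user's profile exceeds the threshold."""
    mean, std = profile
    if std == 0:
        # A perfectly constant history: any deviation at all is unusual.
        return value != mean
    return abs(value - mean) / std > threshold

# Hypothetical daily download volumes (MB) for one employee.
history = [120, 135, 110, 128, 140, 125, 118, 132]
profile = build_profile(history)

print(is_anomalous(130, profile))   # False: a typical day
print(is_anomalous(2400, profile))  # True: a bulk-download spike worth investigating
```

A real system would combine many features per user and a learned model rather than a single statistic, but the principle is the same: learn what normal looks like for each user, then flag deviations.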

Privacy-preserving machine learning

It’s easy to identify applications in which the data providers for AI, whether in the training phase or in the inference phase, do not want to hand over their data unprotected. With the EU General Data Protection Regulation (GDPR) in effect since May 25, 2018, privacy protection is mandatory for any business processing the personal data of people in the EU, and non-compliance can result in heavy fines.

There are also applications in industrial environments where data privacy is crucial to system providers. In predictive maintenance, for example, machine data is used to determine the condition of in-service equipment in order to predict precisely when maintenance should be performed. This approach achieves substantial cost savings over routine or time-based preventive maintenance because tasks are performed only when required and, ideally, before the system fails. Machine owners participating in the service clearly intend to benefit from the generated data; however, they also have a strong interest in not sharing their data with competitors using the same machines. This puts the maintenance service provider in a dilemma. The key question is: how can businesses continue to respect privacy concerns while still using big data to drive business value?

Homomorphic encryption offers one answer. With homomorphic encryption, the data used in a computation remains encrypted throughout and becomes visible only to the intended user: the result of the computation, once decrypted, matches the result of the same computation applied to the plaintext.

In addition, attribute-based authentication, as used by NXP, is based on the Identity Mixer protocol developed at IBM® Research. It relies on a combination of flexible public keys (pseudonyms) and flexible credentials that allow a user to share only the information required for a given transaction, without revealing any other attributes.

The advantages are obvious: “The Internet is like the lunar surface: it never forgets a footprint. With Identity Mixer, we can turn it into a sandy beach that regularly washes everything away,” says Jan Camenisch, cryptographer and co-inventor of Identity Mixer.
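The article does not name a specific homomorphic scheme, so the sketch below substitutes textbook Paillier encryption, a well-known additively homomorphic scheme: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. It is a toy under loud assumptions: the primes are tiny and hard-coded, there is no parameter validation, and it must never be used to protect real data. It shows only the homomorphic property described earlier in this section, with a hypothetical machine owner who encrypts two sensor readings, lets a service provider add them blindly, and alone holds the private key to read the result.

```python
import math
import secrets

# Textbook Paillier with tiny hard-coded primes: illustration only, NOT secure.
P, Q = 1009, 1013

def keygen(p=P, q=Q):
    """Return (public_key, private_key) for the Paillier cryptosystem."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    g = n + 1                                           # common simple choice of generator
    mu = pow(lam, -1, n)                                # valid shortcut because g = n + 1
    return (n, g), (n, lam, mu)

def encrypt(pub, m):
    """Encrypt an integer 0 <= m < n with fresh randomness r coprime to n."""
    n, g = pub
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return pow(g, m, n2) * pow(r, n, n2) % n2

def decrypt(priv, c):
    """Recover m = L(c^lam mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    n, lam, mu = priv
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n

def add_encrypted(pub, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    n, _ = pub
    return c1 * c2 % (n * n)

pub, priv = keygen()                 # the machine owner keeps priv to themselves
c_a = encrypt(pub, 42)               # hypothetical sensor reading 1
c_b = encrypt(pub, 58)               # hypothetical sensor reading 2
c_sum = add_encrypted(pub, c_a, c_b) # computed by the provider without seeing 42 or 58
print(decrypt(priv, c_sum))          # 100
```

Paillier supports only addition (and multiplication by a known constant); fully homomorphic schemes that also allow multiplying encrypted values exist, but they are considerably more complex.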

Attacking machine learning: attacks at training time

Attacks can occur as early as the first phase of ML, when training data is collected and fed into the model. Here, the attacker’s goal is to alter the data and thereby manipulate the outcome of the ML model. A typical target is an anomaly detection tool trained on data sent by users. If a user “poisons” the training data by deliberately sending incorrect inputs, the model may perform poorly or even fail at inference time.
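A toy illustration of poisoning, under simplifying assumptions: the hypothetical detector below is “trained” by computing a threshold of mean plus three standard deviations over user-submitted values, and the attacker can slip a few samples into that training set. It does not model any specific system; it only shows how a handful of injected values can shift what the model considers normal, so that a later malicious input goes undetected.

```python
import statistics

def train_threshold(samples, k=3.0):
    """'Train' a detector: values above mean + k * stdev are treated as anomalies."""
    return statistics.mean(samples) + k * statistics.pstdev(samples)

clean = [10, 12, 11, 9, 10, 11, 12, 10, 9, 11]  # honest user submissions
attack_value = 50                               # the input the attacker wants to slip through later

clean_threshold = train_threshold(clean)

# A malicious user submits a few extreme values during data collection.
poisoned = clean + [45, 48, 52, 55]
poisoned_threshold = train_threshold(poisoned)

print(attack_value > clean_threshold)     # True: the clean model flags the attack
print(attack_value > poisoned_threshold)  # False: the poisoned model lets it pass
```
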

Attacks at inference time: adversarial examples

The user’s privacy must also be guaranteed during the inference phase, especially when inference is performed on private or sensitive data. However, the user can also be the attacker. As a means of attack, the user may employ so-called adversarial examples: valid inputs that have been crafted to cause the machine learning model to misinterpret them. This seemingly benign attack can have catastrophic consequences, for instance in road sign classification in safety-critical situations.

By attaching a specially crafted sticker to a stop sign, researchers have shown that they can trick an image classifier into misinterpreting the sign or not recognizing it at all. While the sign still looks like a regular stop sign to the human eye, the machine learning model no longer recognizes it as one. The concept of adversarial examples is not new. What is new is the severity of their consequences: in the stop sign example, an autonomous car could crash.
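The stop-sign research is not described here in enough detail to reproduce, so the sketch below illustrates the general idea with a different, well-known technique: the fast gradient sign method (FGSM) applied to a hypothetical linear classifier. The six “features”, the weights, the bias, and the perturbation budget `epsilon` are all made up for illustration; real attacks target deep networks and operate on image pixels.

```python
# Hypothetical linear "stop sign" classifier over six normalized image features.
WEIGHTS = [0.5, -0.3, 0.8, 0.2, -0.6, 0.4]
BIAS = -0.2

def score(x):
    """Linear decision score: positive means 'stop'."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS

def classify(x):
    return "stop" if score(x) > 0 else "other"

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(x, epsilon):
    """One fast-gradient-sign step that pushes the score down.

    For a linear model, the gradient of the score with respect to x is just
    WEIGHTS, so each feature moves by epsilon against the sign of its weight.
    Results are clipped to the valid feature range [0, 1].
    """
    return [min(1.0, max(0.0, xi - epsilon * sign(w))) for w, xi in zip(WEIGHTS, x)]

original = [0.6, 0.2, 0.5, 0.4, 0.3, 0.5]
adversarial = fgsm(original, epsilon=0.25)

print(classify(original))     # stop
print(classify(adversarial))  # other
```

Because the budget applies to each feature separately, the total change in score grows with the number of features; across the thousands of pixels in a real image, individually tiny changes can add up to a different classification.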

ML as a service: IP protection

The value of machine learning models resides mostly in the associated data sets, because training data can be very expensive to collect or difficult to obtain. When machine learning is offered as a service, the user has access only to the inputs and outputs of the model. For example, in the case of an image classifier, a user submits an image and receives its category in return. A user might be tempted to copy the model itself to avoid paying for future usage. One possible attack is to query the service with chosen input data, collect the corresponding outputs, and use the resulting data set to train a functionally equivalent model.
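In the simplest case, the copy can be obtained by solving equations rather than by full training. The sketch below assumes, hypothetically, that the service hides a linear model and returns its raw numeric score rather than just a category; under that assumption, n + 1 chosen queries (the all-zero input plus one unit vector per feature) recover the model exactly. `make_service` and `extract_linear_model` are illustrative names, not a real API. A service that returns only labels would force the attacker to collect many more query/answer pairs and fit a surrogate model to them, as described above.

```python
def make_service(weights, bias):
    """Stand-in for a prediction API: callers see only inputs and outputs."""
    def predict(x):
        return sum(w * xi for w, xi in zip(weights, x)) + bias
    return predict

def extract_linear_model(predict, n_features):
    """Recover (weights, bias) of a hidden linear model using n_features + 1 queries."""
    bias = predict([0.0] * n_features)        # f(0) = b
    weights = []
    for i in range(n_features):
        unit = [0.0] * n_features
        unit[i] = 1.0
        weights.append(predict(unit) - bias)  # f(e_i) - b = w_i
    return weights, bias

# The provider's secret model (hypothetical values).
service = make_service([0.7, -1.2, 0.05], 0.3)

# The attacker queries the service and builds a local, free-to-use copy.
stolen_weights, stolen_bias = extract_linear_model(service, n_features=3)
local_copy = make_service(stolen_weights, stolen_bias)

x = [2.0, 0.5, -4.0]
print(service(x), local_copy(x))  # the two predictions agree up to floating-point rounding
```
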

Why now is the time to check your defense mechanisms

At NXP, we build some of the most sophisticated secure devices in the world. We design countermeasures into them to protect them against a broad range of logical and physical attacks, such as side-channel or template attacks.

It’s only a matter of time before hackers rely on AI to extract secrets and critical information from secure systems, since AI enhances their “learning” capabilities.

To prepare ourselves for these evolving threats, we must make the implications of AI an integral part of IoT system security. If the training and inference of machine learning models are not to become a wide-open gate for future adversaries, security-by-design and privacy-by-design principles must be considered from the beginning. Fortunately, it’s not too late for the AI realm to apply the lessons already learned from IoT security. If we keep this in mind when designing the infrastructure and devices of the future, the AIoT holds the power to transform our lives. It’s up to us to turn the black box of the future into a bright one.