By applying cloud-computing technology found in massive data centers to

smaller-scale IoT deployed in the field, edge computing will revolutionize

embedded systems. Mouse clicks will be all that is needed to deploy new

software. The wealth of data generated by IoT endpoints will be speedily

analyzed and acted upon. Systems will be deployed quickly, then managed and

operated securely. Edge computing ultimately will enable rapid development and

deployment of novel services, raising the embedded world’s innovation

rate.

To understand how, look at how the cloud has transformed information

technology (IT). Companies can now provision servers with a click of the mouse

instead of physically installing hardware. Software infrastructure such as

databases can be provisioned just as simply. Big data sets are now easy to handle.

Applications can be scaled up and down depending on load. Cloud computing, for

the most part, has been centralized and operated by a specialized cloud

service provider, such as Amazon Web Services. Enterprises are starting to

explore private clouds for IT workloads, and a hybrid approach joins private

and public clouds.

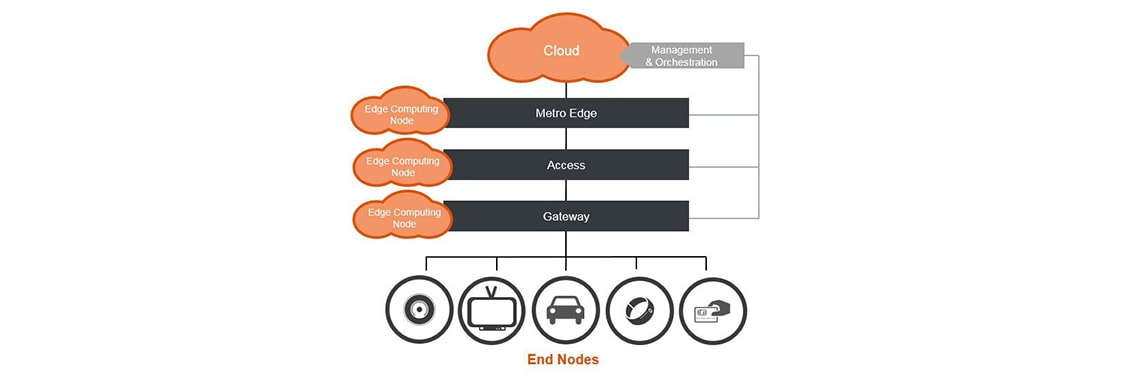

In the networking sphere, the idea of placing compute nodes in the access

network has been around for a while. At first, people didn’t know what

to do with the idea. The usually cited use was for caching. If that’s

the only use, deploying a dedicated caching appliance is much simpler.

Subsequently, along came Network Functions Virtualization (NFV), which applies

cloud technology to implement network functions. These functions can be hosted

in a data center (that is, in the IT cloud) or in the edge network alongside

routers, in the access network or at customer premises.

The term edge computing refers to cloud-style computing at any of these

locations outside a big data center, and it’s now apparent that it can

be used for IoT generally and not only for networking. Cloud management and

orchestration technology can be used to provision new applications within the

IoT in the same way as IT managers spin up servers in the cloud. An IoT node

can access basic services like storage and databases in the same standardized

way they’re accessed in the cloud. Big data sets generated by IoT nodes

can be processed close by, reducing the latency between analysis and action

and the amount of data that otherwise would have to flow through fat pipes to

a cloud data center. In summary, edge computing is a big deal.
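
As a rough illustration of processing data close to where it is generated, the sketch below reduces a window of raw sensor readings to a small summary that an edge node could forward instead of the raw stream. The function name `summarize_window`, the `alert_threshold` parameter and the sample values are assumptions made for this example; they do not come from any particular edge framework.

```python
from statistics import mean


def summarize_window(readings, alert_threshold):
    """Reduce a window of raw readings to a compact summary.

    Hypothetical helper: an edge node could send this summary upstream
    instead of every raw sample, and act on alerts locally.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Readings above the threshold are kept so they can be acted on nearby.
        "alerts": [r for r in readings if r > alert_threshold],
    }


# Made-up temperature samples collected by an IoT node.
window = [20.0, 21.0, 19.0, 35.0]
summary = summarize_window(window, alert_threshold=30.0)
print(summary)
```

Only the summary and any alerts would need to cross the network, while the raw window can be discarded or stored locally.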

The Edge Computing Ecosystem

Various companies are developing edge computing. Symbiotic with public

networks, edge computing has an industry specification group within the

influential European Telecommunications Standards Institute (ETSI). ETSI

states that multi-access edge computing[1]

“is a natural development in the evolution of mobile base stations and

the convergence of IT and telecommunications networking.” The

organization has published various specifications and guidelines on the topic.

Various network equipment vendors have offered up their visions as well.

Cloud companies—Amazon, Google, Microsoft, Alibaba, etc.—are

also developing edge computing. Having built their core businesses on data and

the software for processing it, their focus is on extending these technologies

to the IoT. Coming from the industrial automation side, Siemens has developed

MindSphere, a cloud-based platform for IoT applications.

The starting point for most of these initiatives is to provide SDKs and APIs

for applications on IoT nodes to access services hosted in the cloud, that is,

in a remote data center.

The next step is for the services to run outside the data center in edge

computing nodes. Ideally these nodes’ resources and those in the data

center are completely fungible. Applications do not know where they reside and

their location can move as needed. The applications themselves can run on an

IoT node, or alongside service functions on an edge-computing node such as an

IoT gateway. The latter case especially makes sense if the IoT node is

constrained (meaning it is incapable of hosting services).
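
One way to picture this location independence is a resolver that hands an application whichever endpoint currently hosts a service. The sketch below is a minimal illustration under stated assumptions: the `Endpoint` and `ServiceResolver` classes, the `edge_available` flag and the example URLs are all hypothetical and are not part of any vendor's SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    location: str  # "edge" or "cloud"
    url: str       # hypothetical address, for illustration only


class ServiceResolver:
    """Maps a service name to whichever endpoint is preferred right now."""

    def __init__(self):
        self._endpoints = {}

    def register(self, service, endpoint):
        self._endpoints.setdefault(service, []).append(endpoint)

    def resolve(self, service, edge_available=True):
        candidates = self._endpoints.get(service, [])
        # Prefer a nearby edge instance when one is registered and reachable.
        if edge_available:
            for endpoint in candidates:
                if endpoint.location == "edge":
                    return endpoint
        # Otherwise fall back to the instance in the data center.
        for endpoint in candidates:
            if endpoint.location == "cloud":
                return endpoint
        raise LookupError(f"no endpoint registered for {service!r}")


resolver = ServiceResolver()
resolver.register("storage", Endpoint("cloud", "https://cloud.example/storage"))
resolver.register("storage", Endpoint("edge", "https://gateway.example/storage"))

print(resolver.resolve("storage").url)
print(resolver.resolve("storage", edge_available=False).url)
```

The application asks only for "storage"; whether the answer points at a gateway or a data center is decided outside the application.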

Amazon’s AWS Greengrass is a leading software framework for edge

computing. It works in conjunction

with the AWS IoT Device SDK, which enables constrained devices to interact

with their environment and invoke services in the cloud or at an unconstrained

device (for example, an edge-computing node like an IoT gateway). This unconstrained

device has persistent storage and runs the AWS Greengrass core, which provides

the services, hosts software functions and manages communications with

devices. Importantly, the device running Greengrass can sync and interact with

the cloud, providing the fungibility of resources discussed above.

Microsoft’s Azure IoT Edge is similar. I personally expect companies that only

offer SDKs and APIs for

accessing remote services (analogs to the AWS IoT Device SDK) to implement

something like AWS Greengrass, enabling them to fully harness the power of

edge computing.

NXP’s Role

Although edge computing provides a consistent software view between cloud

services hosted on edge-computing nodes and in data centers, the underlying

hardware is fundamentally different. Cloud data centers are renowned for massive

scale and homogeneity. Each discrete piece of the data center is beefy

(compared to an embedded system) and replicated many times. Because of how

server processors are priced, individual compute nodes have two (as opposed to

more) processors. Hundreds of these processors may be in a rack, tens of racks

in a row and tens of rows under the same roof, with multiple sites networked

together.

Edge nodes, on the other hand, will vary in scale and often are a single

hardware instance. Customer-premises equipment may be optimized for cost and

contain a single processor, such as NXP’s QorIQ® Layerscape LS1043

processor. Fast, but still low power, Layerscape processors such as the LS2088

processor could reside at an access node, such as a base station. Because of

this variation in scale, the ideal processor supplier offers many compatible

processors at different price/performance points, as NXP does with its 64-bit

ARM-based Layerscape line.

What sets apart NXP’s Layerscape-based solutions is security. The cloud

has proven secure. Although hackers have attacked many web-based companies,

they’ve exploited flaws in the companies’ applications and

business practices and not flaws in the underlying cloud services. The IoT,

however, has been a security nightmare, and devices have routinely been hacked. To be

successful, the edge must be at least as secure as the cloud and, ideally,

the edge can secure IoT nodes.

NXP’s platform trust architecture implemented in Layerscape processors

can help secure IoT devices throughout their lifecycle—manufacturing,

commissioning, operation, update and decommissioning. We’ve discussed

applying this trust architecture in the context of conventional embedded

systems that are programmed during manufacture.

Importantly, NXP’s platform trust architecture helps companies automate

the deployment of IoT and edge nodes. As noted above, a benefit of cloud

technology to IT managers is the speed of provisioning servers owing to

automation. Given the almost uncountable number of IoT devices forecasted to

be deployed, manually generating and installing certificates and software is

an untenable approach to provisioning. NXP’s trust architecture has each

Layerscape processor generate unique key pairs based on its unique ID number

and other parameters. These key pairs can then be used to set up a secure

connection with a cloud-based provisioning service to exchange certificates

and other information required by Greengrass or a similar framework to

bootstrap that framework’s security regime.
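
The general idea of deriving per-device key material from a unique ID can be sketched in a few lines. The example below is not NXP's mechanism, which the text does not detail; it only shows, as an assumption-laden illustration, how HKDF (RFC 5869) built from Python's standard `hmac` and `hashlib` modules can turn a device secret, a device ID and a purpose label into a repeatable, device-specific seed. The names `hkdf_sha256` and `derive_device_seed`, the secret and the IDs are made up, and a real device would do this in hardware and go on to generate an asymmetric key pair.

```python
import hashlib
import hmac

HASH_LEN = 32  # SHA-256 output size in bytes


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a PRK, then expand it."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested length too large for HKDF")
    # Extract step: PRK = HMAC(salt, input keying material)
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    # Expand step: T(i) = HMAC(PRK, T(i-1) | info | i)
    okm = b""
    block = b""
    counter = 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def derive_device_seed(device_secret: bytes, device_id: bytes, purpose: bytes) -> bytes:
    """Hypothetical helper: a 32-byte seed unique to this device and purpose."""
    return hkdf_sha256(ikm=device_secret, salt=device_id, info=purpose)


# Made-up inputs; a real secret would never leave the silicon.
secret = b"example-device-secret"
seed_a = derive_device_seed(secret, b"device-0001", b"provisioning")
seed_b = derive_device_seed(secret, b"device-0002", b"provisioning")
print(seed_a.hex())
print(seed_b.hex())
```

Because the derivation is deterministic, the device can regenerate the same seed on every boot without storing it, while a different ID or purpose label yields unrelated output.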

NXP urges all suppliers of IoT and cloud technology, be they focused on

consumer or industrial applications, to engage with us in developing secure

edge computing solutions. We’ve seen how cloud technology has

revolutionized IT. Let’s bring the revolution to the embedded

world.

[1] ETSI originally used the term mobile edge computing (MEC), with the idea as

noted here that it would be associated with cellular base stations. Realizing

that edge computing is more broadly applicable but having gotten hitched to

the MEC abbreviation, they found another word starting with m. Other

organizations have used the term fog computing instead of edge computing. For

me, edge computing falls under the clunky umbrella term “redistributed

intelligence” that I used in 2014 to convey how processing that was once

centralized might become decentralized (for example, edge computing, as discussed

here) and vice versa (for example, cloud/centralized RAN).