Author

Alisha Perkins

Alisha Perkins is the global Marketing Communications manager for NXP's Advanced Driver Assist Solutions. She has worked in the semiconductor industry for the past 10 years.

As the automotive market evolves toward fully autonomous L5 vehicles, system designers have faced the difficult task of taking advanced machine learning (ML) technologies developed in power-hungry, datacenter-class compute environments and adapting them for embedded automotive applications with limited power budgets. Without a development platform optimized specifically for AI-based ADAS applications, designers have been forced to port complex workflows from one environment to the other, at considerable expense and with delayed time to market.

NXP endeavored to meet this challenge head on when we announced our highly anticipated eIQ Auto Toolkit in October of last year. Today, we’re excited to announce that the NXP eIQ Auto Toolkit is now available to the automotive mass market. And the need for this platform couldn’t be greater.

For designers struggling to move from a development environment to AI application implementations, while also meeting stringent automotive safety and reliability standards, the NXP eIQ Auto Toolkit helps shorten development cycles by leveraging neural networks, inference engines and NXP’s S32V processor family to maximize processing agility and performance for real-time AI automation.

These are critical capabilities for a wide range of automated driving applications, from object classification and path planning to driver/occupant monitoring, powertrain optimization and more. This AI enablement, made possible in part by advanced deep learning capabilities for vision, LIDAR and RADAR technologies, will help usher in a new era of automotive safety, intelligence and eco-friendliness.

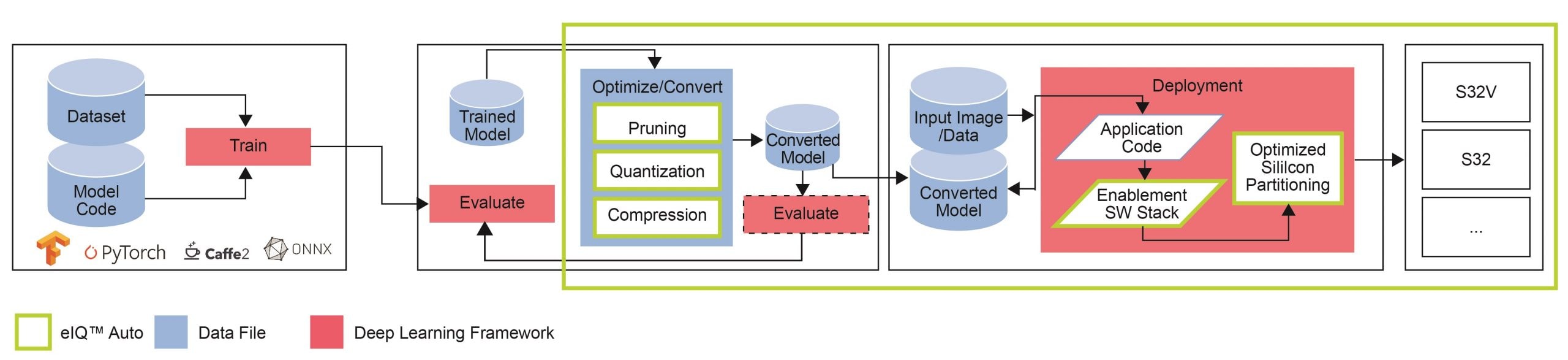

NXP’s eIQ Auto Toolkit helps designers convert and fine-tune AI models built with familiar frameworks and libraries such as TensorFlow, Caffe* and/or PyTorch, and port those models to high-performance, automotive-grade NXP processors such as the S32V processor family. Using pruning and compression techniques, the neural networks can then be optimized for maximum efficiency.
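The toolkit's own pruning and compression workflow isn't described here, so the following is only a generic sketch of the underlying idea, assuming plain unstructured magnitude pruning: the smallest-magnitude weights are set to zero, and the surviving weights are stored compactly as (index, value) pairs. The helper names `magnitude_prune` and `to_sparse` are hypothetical and are not part of any NXP API.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude.

    Generic unstructured magnitude pruning (hypothetical helper, not an eIQ API).
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Rank weight indices from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def to_sparse(weights):
    """Compress a pruned weight list by keeping only nonzero (index, value) pairs."""
    return [(i, w) for i, w in enumerate(weights) if w != 0.0]


if __name__ == "__main__":
    layer = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2]
    pruned = magnitude_prune(layer, sparsity=0.5)
    print(pruned)             # [0.9, 0.0, 0.3, 0.0, -0.7, 0.0]
    print(to_sparse(pruned))  # [(0, 0.9), (2, 0.3), (4, -0.7)]
```

In practice, pruning is usually followed by fine-tuning to recover accuracy, and hardware accelerators often benefit more from structured (channel- or block-level) sparsity than from the unstructured variant shown here.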

One of the key advantages of NXP’s eIQ Auto Toolkit is that it helps designers avoid the cost and time penalties of manually designating and programming an onboard compute engine for each and every layer of a deep learning algorithm, an extremely cumbersome process. Instead, designers can schedule tasks across CPU cores and accelerators so that each algorithm layer is matched to the best-suited compute engine. This helps maximize processing efficiency while conserving power for other functions.
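Matching layers to compute engines can be pictured as a small scheduling problem. The sketch below is a hypothetical greedy assignment, not the toolkit's actual scheduler: each layer goes to whichever engine has the lowest estimated latency for its operation, skipping engines that cannot run it. The engine names (`cpu`, `apex`), the `Layer` type and every latency figure in `COST_MS` are illustrative placeholders, not NXP measurements.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    op: str


# Hypothetical per-op latency estimates in milliseconds for each engine.
# None marks an op the engine cannot execute. Placeholder numbers only.
COST_MS = {
    "conv2d": {"cpu": 4.0, "apex": 0.5},
    "relu": {"cpu": 0.2, "apex": 0.1},
    "softmax": {"cpu": 0.3, "apex": None},
}


def assign_engines(layers, cost_ms):
    """Greedily map each layer to the engine with the lowest estimated latency."""
    plan = {}
    for layer in layers:
        # Keep only engines that support this layer's operation.
        candidates = {eng: c for eng, c in cost_ms[layer.op].items() if c is not None}
        if not candidates:
            raise ValueError(f"no engine supports op {layer.op!r}")
        plan[layer.name] = min(candidates, key=candidates.get)
    return plan


if __name__ == "__main__":
    net = [Layer("conv1", "conv2d"), Layer("act1", "relu"), Layer("prob", "softmax")]
    print(assign_engines(net, COST_MS))
    # {'conv1': 'apex', 'act1': 'apex', 'prob': 'cpu'}
```

A production scheduler would also need to account for the cost of moving tensors between engines, which this per-layer greedy choice ignores.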

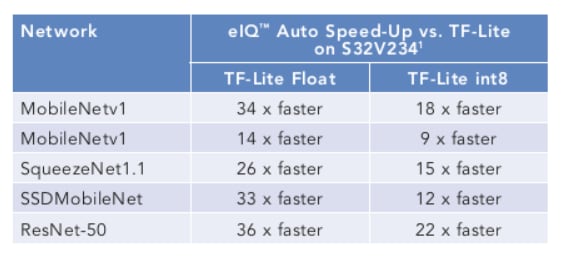

The resulting performance gain is substantial: over 30X higher performance for a given model compared with other embedded deep learning frameworks, based on NXP’s internal benchmarking¹ of a single-thread TensorFlow Lite model using floating-point computation versus an eIQ quantized version of the model running on the dual APEX-2 vision accelerator cores of an NXP S32V234. The results speak for themselves and show how NXP’s eIQ Auto DL Toolkit will help automotive system designers.
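The benchmark contrasts floating-point inference with a quantized model. As a generic illustration of what quantization involves (the exact scheme eIQ Auto uses is not stated here), the sketch below applies symmetric per-tensor int8 quantization, mapping each float x to round(x / scale) with scale = max|x| / 127. The helper names are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor int8 quantization (generic sketch, not eIQ Auto's scheme).

    Returns the int8 codes and the float scale needed to map them back.
    """
    if not values:
        return [], 1.0
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() uses round-half-to-even; clamp to the symmetric int8 range [-127, 127].
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [q * scale for q in codes]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.27]
    codes, scale = quantize_int8(weights)
    print(codes)  # [50, -127, 0, 127]
    restored = dequantize_int8(codes, scale)
    # Each restored value is within half a quantization step of the original.
    print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2)
```

Storing weights as 8-bit integers instead of 32-bit floats cuts their size by 4X and lets integer-optimized hardware do the arithmetic; the trade-off is a rounding error of at most scale / 2 per value.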

With NXP’s eIQ Auto Toolkit, these designers also have the confidence that they’re tapping into a well-established technology platform. The toolkit is a specially designed evolution of NXP’s eIQ (“edge intelligence”) machine learning software development environment. Widely deployed today across a broad range of advanced AI development applications, NXP’s eIQ software leverages inference engines, neural network compilers and optimized libraries for easier system-level application development and machine learning algorithm enablement on NXP processors.

NXP’s eIQ Auto Toolkit is available to designers today. So if you’ve been frustrated with slow, cumbersome and expensive development cycles for next-generation AI automotive apps, help is on the way!

(*) Roadmap item

1 Based on internal NXP benchmarks. Comparison of a single-thread TensorFlow Lite (TF Lite) quantized model running on the Arm Cortex-A53 at 1 GHz versus the eIQ Auto version of the model running on dual APEX-2 cores on the S32V234.